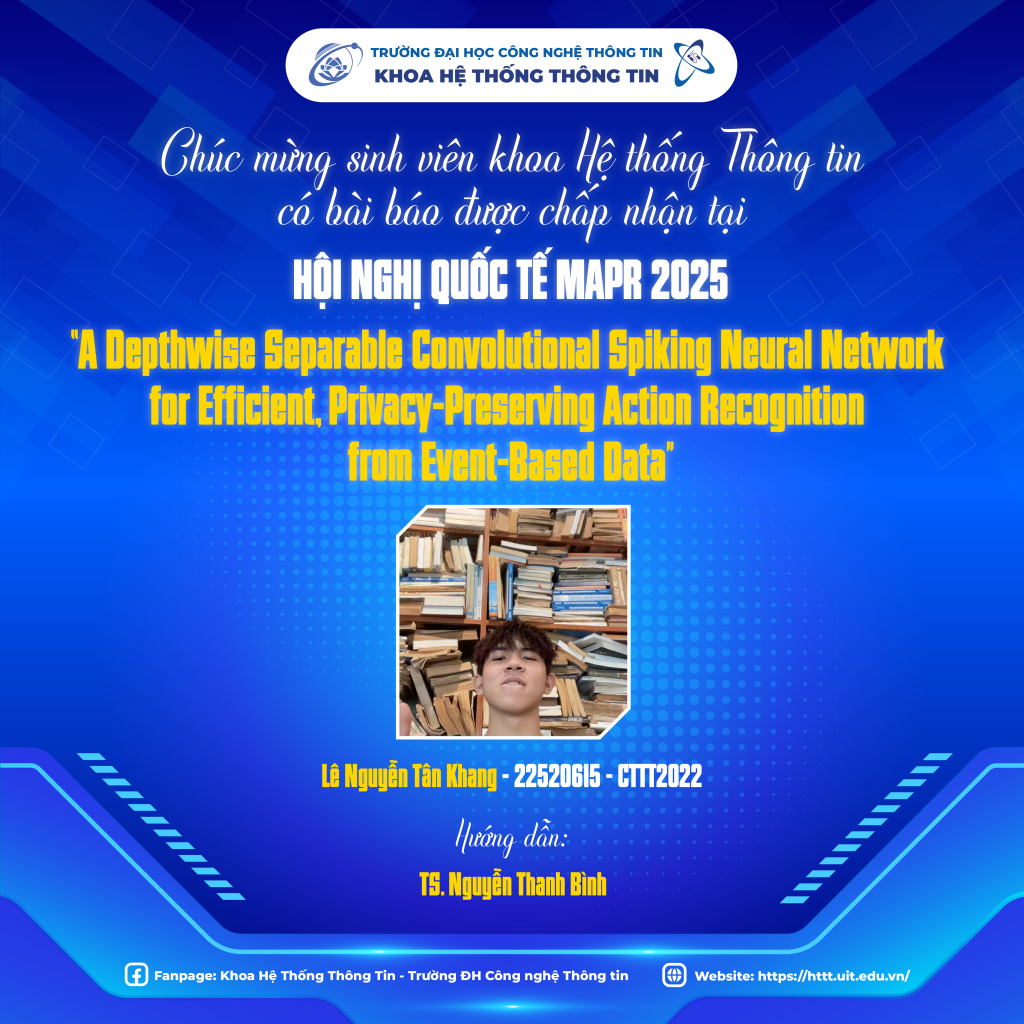

Congratulations to a student of the CTTT2022 class whose research paper has been accepted at the MAPR 2025 International Conference.

MAPR is an annual international conference co-founded and organized by the University of Information Technology (UIT). It is a Scopus-indexed conference with technical sponsorship from IEEE, and accepted papers will be published in IEEE Xplore. MAPR 2025 will take place at Novotel Nha Trang, Khánh Hòa, over two days, August 14-15, 2025.

Conference website: https://mapr.uit.edu.vn/

Paper title: “A Depthwise Separable Convolutional Spiking Neural Network for Efficient, Privacy-Preserving Action Recognition from Event-Based Data”

Student author: 22520615 – Lê Nguyễn Tân Khang – CTTT2022

Supervisor: Dr. Nguyễn Thanh Bình

Abstract: “In modern surveillance systems, ensuring public safety while preserving individual privacy is critical. Traditional video-based action recognition methods often capture detailed visual information, risking privacy violations. Dynamic Vision Sensors (DVS), which record only changes in light intensity, offer an attractive alternative; however, processing such event-based data efficiently remains challenging. This paper proposes DSC-SNN, a novel architecture integrating Depthwise Separable Convolutions (DSC) into a Spiking Neural Network (SNN) framework for efficient event-based action recognition. DSC-SNN leverages the temporal processing capabilities of spiking neurons and the computational efficiency of DSC to extract spatiotemporal features from DVS data while preserving privacy. We conducted experiments and comparative evaluations between our proposed solution and traditional Convolutional Neural Network (CNN) approaches on two benchmark datasets: Bullying10K and DVSGesture. In conventional SNNs, increasing the number of channels generally leads to improved accuracy, but this comes at the cost of exponentially higher computational demands. Experimental results show that our proposed model achieves up to 98% accuracy with only 64 channels, surpassing traditional neural networks, while reducing the computational cost of convolutional layers by up to 80%. This demonstrates that our approach offers a significant improvement, delivering high efficiency and performance in real-time visual recognition tasks using SNNs.”
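The efficiency claim in the abstract rests on how a depthwise separable convolution factors a standard convolution. The sketch below is not the authors' code; it uses the textbook multiply-accumulate (MAC) count for depthwise separable convolutions (as used in MobileNet). A standard k × k convolution costs k²·C_in·C_out MACs per output position, while a depthwise k × k pass followed by a pointwise 1 × 1 pass costs k²·C_in + C_in·C_out, a ratio of 1/C_out + 1/k². The 3 × 3 kernel, 64 channels, and 128 × 128 feature map are assumptions chosen for illustration. The count also ignores spiking-specific factors such as binary spike inputs and the number of time steps, so it will not necessarily match the paper's reported figure.

```python
def conv_macs(k: int, c_in: int, c_out: int, h: int, w: int) -> int:
    """MACs of a standard k x k convolution (stride 1, 'same' padding)."""
    return k * k * c_in * c_out * h * w


def dsc_macs(k: int, c_in: int, c_out: int, h: int, w: int) -> int:
    """MACs of a depthwise k x k convolution followed by a pointwise 1 x 1 convolution."""
    depthwise = k * k * c_in * h * w  # one k x k filter per input channel
    pointwise = c_in * c_out * h * w  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer: 3x3 kernel, 64 -> 64 channels, 128x128 feature map (assumed sizes).
    k, c_in, c_out, h, w = 3, 64, 64, 128, 128
    std = conv_macs(k, c_in, c_out, h, w)
    dsc = dsc_macs(k, c_in, c_out, h, w)
    print(f"standard: {std:,} MACs, DSC: {dsc:,} MACs, reduction: {1 - dsc / std:.1%}")
```

The feature-map size cancels out of the ratio, so the idealized per-layer saving depends only on the kernel size and the number of output channels.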